“My Cars Don’t Drive Themselves”:

Bee-Bot Research with Preschoolers

Preschoolers’ Guided Play Experiences with Button-Operated Robots

Authors: Jacob Hall and Kate McCormick

This story contains excerpts from the full research article, published on April 15, 2022, in the journal TechTrends.

The goal of this research project was to describe what preschool children’s computational thinking (CT) experiences are like when button-operated robots are introduced into their guided play. We explored the emergence of CT skills during guided play with Bee-Bots.

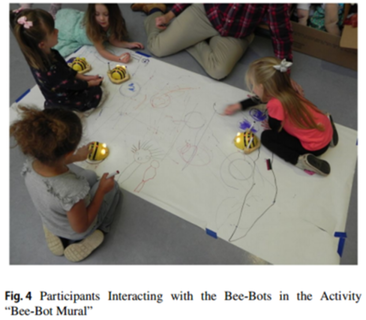

During this 6-week program, 29 children ages 3 to 5 from an early childhood center participated. Data collected included audio-visual recordings, observations, child focus groups, and child-generated artifacts. The findings suggest children constructed meaning across the CT dimensions, connected with others through dialogue and negotiation, and used guidance from adults to extend their learning.

Research on how to design CT experiences for young children is needed to ensure programs effectively support diverse learners with developmentally appropriate approaches. Therefore, to investigate young children’s CT experiences, we employed a guided play approach that allowed children to be self-directed and semi-autonomous in their play with age-appropriate button-operated robots.

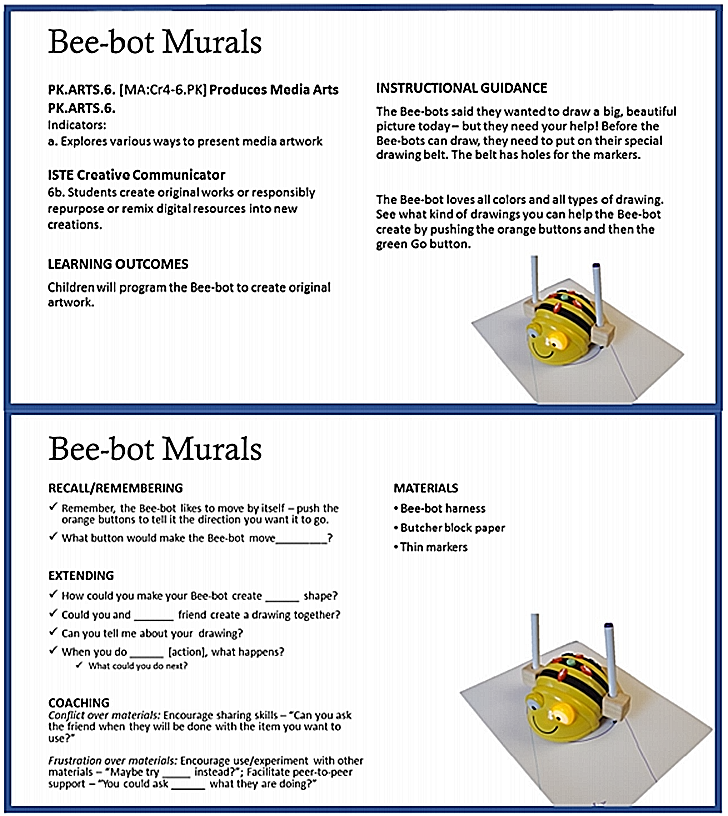

Children were encouraged to visit the CT center when it was open but were never forced to engage in the activity. Each activity included a structured “Invitation to Play” card, one Bee-Bot per participating child (up to 6 children), and associated props, such as this tunnel.

Fig. 1 Sample “Invitation to Play” card for Bee-Bot mural activity (front and back).

In examining how preschool children experienced guided play with button-operated robots, the analysis of video data, photographs, and child focus groups resulted in three primary findings.

- Preschool children constructed meaning across CT dimensions (i.e., concepts, practices, and perspectives), and these meaning-making experiences were informed by feedback from the Bee-Bot.

- The children connected with others through dialogue and negotiation with peers.

- They used guidance from adults to extend their learning.

“Bee‑Bots Are Driving Crazy”—Making Sense of the Robot’s Actions

Making sense of the concepts of event and sequence was an essential element of children’s debugging experiences. Uncertainty in children’s play often arose because programs had not been cleared. Because children could come and go from the center, robots were passed from one child to the next with a sequence still in their memory. At other times, children may have begun with a clear Bee-Bot memory but forgotten to keep clearing it as they played. They then expressed frustration when the enacted sequence did not match what they anticipated.
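To illustrate why an uncleared memory can surprise a child, here is a minimal sketch of a robot whose commands stay stored until they are explicitly cleared. The `ToyBeeBot` class and its method names are hypothetical and written only for this illustration. They are not Bee-Bot software, and the model assumes that pressing Go replays everything stored so far.

```python
class ToyBeeBot:
    """Simplified, hypothetical model of a button-operated robot's memory."""

    def __init__(self):
        # Commands stay here until clear() is called (assumption of this sketch).
        self.memory = []

    def press(self, command):
        """Store one button press, such as "forward" or "left"."""
        self.memory.append(command)

    def clear(self):
        """Erase every stored command."""
        self.memory = []

    def go(self):
        """Return the sequence the robot would enact; memory is left untouched."""
        return list(self.memory)


bot = ToyBeeBot()

# The first child programs two forward steps, runs them, and leaves the center.
bot.press("forward")
bot.press("forward")
bot.go()

# The second child picks up the same robot and expects a single left turn.
bot.press("left")
enacted = bot.go()
print(enacted)  # ['forward', 'forward', 'left']

# Clearing first gives the sequence the second child anticipated.
bot.clear()
bot.press("left")
cleared_run = bot.go()
print(cleared_run)  # ['left']
```

In this sketch, the second child's first run includes the earlier child's two forward steps, which mirrors the mismatch between enacted and anticipated sequences described above.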

When children experienced a stationary robot, the feedback loop from the Bee-Bot appears to have contributed to making sense of CT concepts.

Researcher-facilitated focus groups provided a unique opportunity for children to consider Bee-Bot’s actions.

Case 2: Focus group excerpt

Henry (3y, 2 m): My cars don’t drive by theirselves

Researcher: What?

Henry: My cars don’t drive by themselves

Researcher: Your cars don’t drive by themselves?

Henry: Yeah…

Researcher: And the Bee-Bot does?

Henry: Yeah…

Jamison (3y, 7 m): The Bee-Bots do drive by themselves

Researcher: They do sort of, but who tells them where to go?

Jamison: Me

Remy: Us…

Researcher: Does the Bee-Bot come up with its own idea on where to go?

Jamison: No. No, never

Researcher: Are you sure he doesn’t?

Jamison: No

Researcher: Well, how does he know where to go?

Jamison: I don’t know

Henry: We tell it where to go

Researcher: Like in this picture, how does this Bee-Bot know where to go?

Henry: [The child] tells it where it goes…

Eventually, the children concluded that the Bee-Bots drive themselves but only go wherever the children tell them to go.

Case studies were videotaped to document the thought processes involved. Here is one example:

Testing and Debugging—Computational Practice

Case 7: Lily sets her bot down in front of the tunnel. She lightly presses Forward and then more firmly presses Go. The Bee-Bot indicates receiving the Go command by blinking its eyes and beeping, but it has not received the Forward input. The Bee-Bot does not move. Lily appears confused. She presses Go again, but the Bee-Bot does not move, since it never received the initial Forward command. She tries to pick the bot up with one hand, but it slips and turns over. She picks it up with both hands and brings it close to her face to look into its eyes and make sure it is on. She turns around and looks toward the researcher for assistance. The researcher joins her and asks what she has pressed. She demonstrates that she pressed Forward then Go and explains that it did not move. The researcher clears the bot and asks how many times Lily thinks she would need to press Forward for the bot to move through the entire tunnel. Lily responds, “Six!” Appearing more confident, she firmly presses the Forward button 6 times, then presses Go, and exclaims “Oh! It went through!” (video data)
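Lily's two attempts can be traced with a short sketch. The function name `run_program` and the tunnel length of six steps are assumptions made for this example. The case only tells us that six Forward presses carried the robot through, not how long the tunnel actually was.

```python
def run_program(registered_presses, tunnel_length=6):
    """Return (steps moved, whether the robot made it through the tunnel).

    tunnel_length is an assumed value for illustration only.
    """
    # Only presses the robot actually registered count as steps.
    steps = sum(1 for press in registered_presses if press == "forward")
    return steps, steps >= tunnel_length


# First attempt: the light Forward press was not registered, so Go runs nothing.
first_attempt = run_program([])
print(first_attempt)  # (0, False)

# Second attempt: after the bot is cleared, six firm Forward presses, then Go.
second_attempt = run_program(["forward"] * 6)
print(second_attempt)  # (6, True)
```

The empty first program is why the robot beeped at Go but stayed still: there was no stored sequence for it to enact.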

Creating Meaning and Computational Artifacts Together

In this setting, the children could learn from each other, help debug programs, model their knowledge, and encourage one another to attempt new sequences. Also evident in their interactions was the motivation to create for others. The children would eagerly call out to friends and show their peers a sequence they had created or invite them to join their robots in pretend play.

Jade noticed that Jonathan’s bot was only moving in a straight line. “Guess what? It can turn!” Jade excitedly said to him. Jonathan appeared to be fully engaged as he leaned forward, vigorously chewing his tongue, and pressed Forward many times followed by Go. As the robot moved forward, Jonathan’s eyes followed its movements, and his body crawled alongside it. “It can turn. It can turn. Watch,” Jade encouraged Jonathan as she picked up his bot to demonstrate this newly learned sequence.

“How did you make it do that?” Guidance from Adults

The children connected with facilitators by sharing how they were playing with the robots. As children made meaning of their experiences with the robots, they used guidance from adults to extend their learning. The facilitators primarily intervened in the play when a child specifically requested help, when a child exhibited moderate to severe frustration, or when peer conflict arose. Facilitators also asked questions such as “How do you make it do that?” This invitation to explain their thinking provided another opportunity for children to externalize their CT understandings and created spaces for reflecting on their play with the robots.

Let’s Figure this Out Together: The Importance of Peer Dialogue

Prior research examining 21 studies of apps aimed at developing young children’s CT found that none of the activities with the apps promoted collaboration and sharing. Instead, the authors noted that “in all studies, coding seems to be a solitary activity.” In this study, by contrast, conversations between peers were an essential component of children’s play and their emerging CT understandings.

The results evidenced that children invited others to join them in their play, helped peers debug errors, modeled how to operate the robot, taught peers new sequences, discussed the meaning of observations, and inspired their peers’ creations.

For complete references, project methodology details, and additional case stories, please refer to the full research article.

Read Dr. Hall’s other customer story about using Bee-Bots with first-grade students in a lesson about drawing geometric shapes.

Contact Information

| Field | Details |
| --- | --- |
| Name | Jacob A Hall, Ph.D. |
| Position | Associate Professor, Childhood/Early Childhood Education Department |
| School | State University of New York (SUNY), Cortland |
| Location | Cortland, NY |
| Email | jacob.hall@cortland.edu |
| Tags | Bee-Bot, Research, New York |
| Age | Preschool, ages 3 to 5 |